Google is aggressively expanding its artificial intelligence infrastructure, unveiling powerful new chips and securing a multibillion-dollar deal with Anthropic, all while challenging Nvidia’s dominance in the AI accelerator market. The announcements center on Ironwood, the company’s seventh-generation Tensor Processing Unit (TPU), and on expanded options for its Arm-based Axion processors. Together they reflect an industry shift toward serving already-trained AI models to billions of users, rather than solely training them.

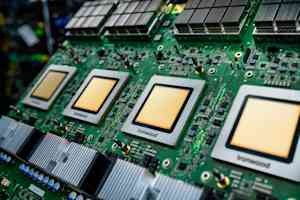

Google’s Newest Chip: Ironwood

At the core of this expansion is the Ironwood chip, Google’s latest custom AI accelerator. It boasts over four times the performance of its predecessor for both training and inference workloads. A single Ironwood “pod” can link up to 9,216 chips via Google’s proprietary Inter-Chip Interconnect, creating a supercomputer whose chips share 1.77 petabytes of High Bandwidth Memory, roughly equivalent to 40,000 Blu-ray movies. Key features include Optical Circuit Switching (OCS), which automatically reroutes data traffic around failures with minimal disruption, and a system-level co-design approach that optimizes hardware and software together.
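The pod figures above can be sanity-checked with a few lines of arithmetic. The sketch below assumes decimal units (1 PB = 10^15 bytes), which the article does not specify, and the variable names are purely illustrative.

```python
# Back-of-the-envelope check of the pod memory figures quoted above.
# Assumption: decimal (SI) units, i.e. 1 PB = 10**15 bytes and 1 GB = 10**9 bytes.
POD_CHIPS = 9_216          # chips per pod, from the article
POD_HBM_PETABYTES = 1.77   # shared HBM per pod, from the article
BLU_RAY_COUNT = 40_000     # the article's Blu-ray comparison

BYTES_PER_PB = 10**15
BYTES_PER_GB = 10**9

pod_hbm_bytes = POD_HBM_PETABYTES * BYTES_PER_PB

# Memory attached to each chip if the pod total is split evenly.
hbm_per_chip_gb = pod_hbm_bytes / POD_CHIPS / BYTES_PER_GB

# Disc size implied by "40,000 Blu-ray movies".
implied_disc_gb = pod_hbm_bytes / BLU_RAY_COUNT / BYTES_PER_GB

print(f"HBM per chip: {hbm_per_chip_gb:.0f} GB")
print(f"Implied size per Blu-ray: {implied_disc_gb:.2f} GB")
```

Under these assumptions the pod total works out to about 192 GB of memory per chip, and the Blu-ray comparison implies about 44 GB per disc, which sits between the 25 GB single-layer and 50 GB dual-layer disc capacities.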

Anthropic’s Billion-Dollar Commitment

The most significant validation of Ironwood’s capabilities comes from Anthropic, the AI safety company behind the Claude family of models. Anthropic has committed to accessing up to one million TPU chips in a deal estimated to be worth tens of billions of dollars, among the largest known cloud infrastructure commitments in history. The added capacity will allow Anthropic to scale its compute and maintain the speed and reliability its customers expect.

Axion Processors: Complementing Specialized AI

Alongside Ironwood, Google introduced expanded options for its Axion processor family, custom Arm-based CPUs designed for the general-purpose workloads that support AI applications. The N4A instance type targets microservices and similar supporting services, delivering up to 2x better price-performance than comparable x86 virtual machines. A new C4A metal instance provides dedicated physical servers for specialized workloads.

The AI Hypercomputer: Software and Integration

Google emphasizes that hardware performance alone is insufficient; it must be paired with optimized software. The company offers the “AI Hypercomputer,” an integrated system that brings together compute, networking, storage, and software. This system has reportedly delivered a 353% three-year return on investment for customers. Key software enhancements include advanced maintenance for TPU clusters, support in the open-source MaxText framework for advanced training techniques, and an Inference Gateway that intelligently load-balances requests to reduce latency and serving costs.
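To make the load-balancing idea concrete, here is a minimal sketch of one common strategy: sending each request to the replica with the fewest requests in flight. This is not Google’s Inference Gateway implementation, whose routing logic the article does not describe; the `Replica` and `LeastLoadedBalancer` names are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Replica:
    """A hypothetical model-serving replica tracked by the balancer."""
    name: str
    in_flight: int = 0  # requests currently being processed


class LeastLoadedBalancer:
    """Routes each request to the replica with the fewest in-flight requests."""

    def __init__(self, replicas):
        self.replicas = list(replicas)

    def acquire(self) -> Replica:
        # min() returns the first replica among ties, so routing is deterministic.
        replica = min(self.replicas, key=lambda r: r.in_flight)
        replica.in_flight += 1
        return replica

    def release(self, replica: Replica) -> None:
        # Call this when the replica finishes a request.
        replica.in_flight -= 1


balancer = LeastLoadedBalancer([Replica("replica-a"), Replica("replica-b")])
first = balancer.acquire()    # replica-a (tie broken by list order)
second = balancer.acquire()   # replica-b (replica-a is now busier)
balancer.release(first)       # replica-a finishes its request
third = balancer.acquire()    # replica-a again, since it is idle
print(first.name, second.name, third.name)
```

A production gateway would likely weigh additional signals, such as queue depth or cache state on each replica, but the principle of steering traffic away from busy replicas is the same.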

Addressing the Infrastructure Challenge: Power and Cooling

Google recognizes the massive physical infrastructure challenges posed by this expansion. The company is implementing ±400-volt direct current power delivery capable of supporting up to one megawatt per rack, and it is contributing its fifth-generation cooling distribution unit design to the Open Compute Project. The design relies on liquid cooling, which can transport 4,000 times more heat per unit volume than air, a crucial advantage for increasingly power-intensive AI chips.
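A rough calculation shows why higher voltage and liquid cooling matter at this scale. The sketch below assumes the load sits across both rails (800 V pole to pole) and compares it with a 48 V bus chosen only for illustration; the water and air properties are approximate room-temperature textbook values, not figures from the article.

```python
def current_amps(power_watts: float, voltage_volts: float) -> float:
    """Current drawn by a DC load: I = P / V."""
    return power_watts / voltage_volts


RACK_POWER_W = 1_000_000   # one megawatt per rack, from the article
POLE_TO_POLE_V = 800       # assumption: load connected across the +400 V and -400 V rails
LOW_VOLTAGE_BUS_V = 48     # assumption: a lower-voltage DC bus, for comparison only

high_voltage_current = current_amps(RACK_POWER_W, POLE_TO_POLE_V)
low_voltage_current = current_amps(RACK_POWER_W, LOW_VOLTAGE_BUS_V)

# Volumetric heat capacity = density * specific heat, in J/(m^3 * K).
# Approximate values near room temperature (assumptions).
water_heat_capacity = 998 * 4182   # kg/m^3 * J/(kg*K)
air_heat_capacity = 1.2 * 1005     # kg/m^3 * J/(kg*K)
heat_ratio = water_heat_capacity / air_heat_capacity

print(f"Current at 800 V: {high_voltage_current:,.0f} A")
print(f"Current at 48 V:  {low_voltage_current:,.0f} A")
print(f"Water vs. air heat per unit volume: about {heat_ratio:,.0f}x")
```

With these inputs, a one-megawatt rack draws 1,250 A at 800 V versus roughly 20,800 A at 48 V, and water carries on the order of several thousand times more heat per unit volume than air, the same order of magnitude as the figure quoted above.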

Challenging Nvidia’s Dominance

Google’s announcements represent a bold challenge to Nvidia’s overwhelming dominance of the AI accelerator market, where its share is estimated at 80-95%. While custom silicon development requires significant investment and faces software ecosystem challenges, Google argues that tight integration from model research to chip design enables unique optimizations.

Key Takeaways

Google’s latest moves highlight a crucial shift in the AI landscape, with cloud providers increasingly focused on efficiently deploying trained AI models at scale. The company’s new chips, massive deal with Anthropic, and focus on integrated hardware and software aim to reshape the competitive dynamics of the AI infrastructure market and provide a viable alternative to Nvidia’s GPUs. The industry’s ability to sustain this level of investment and the pace of architectural innovation will be critical to watch in the coming months.